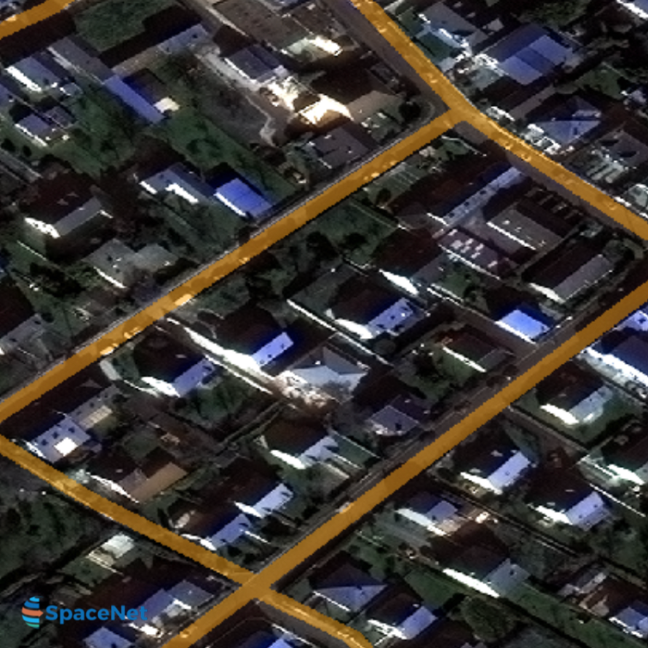

Within the remote sensing domain, a diverse set of acquisition modalities exists, each with its own unique strengths and weaknesses. Yet most of the current literature and open datasets deal only with electro-optical (optical) data for different detection and segmentation tasks at high spatial resolutions. Optical data is often the preferred choice for geospatial applications, but requires clear skies and little cloud cover to work well. Conversely, Synthetic Aperture Radar (SAR) sensors have the unique capability to penetrate clouds and collect in all weather, day and night. Consequently, SAR data are particularly valuable in the quest to aid disaster response, when weather and cloud cover can obstruct traditional optical sensors. Despite all of these advantages, there is little open data available to researchers to explore the effectiveness of SAR for such applications, particularly at very high spatial resolutions. To address this problem, we present an open Multi-Sensor All Weather Mapping (MSAW) dataset and challenge, which features two collection modalities (SAR and optical). The dataset and challenge focus on mapping and building footprint extraction using a combination of these data sources. MSAW covers 120 km^2 over multiple overlapping collects and is annotated with over 48,000 unique building footprint labels, enabling the creation and evaluation of mapping algorithms for multi-modal data. We present a baseline and benchmark for building footprint extraction with SAR data and find that state-of-the-art segmentation models pre-trained on optical data and then trained on SAR (F1 score of 0.21) outperform those trained on SAR data alone (F1 score of 0.135).

Building footprint detection and outlining from satellite imagery is a very useful tool in many types of applications, ranging from population mapping to the monitoring of illegal development, and from urban expansion monitoring to organizing prompter and more effective rescuer response in the case of catastrophic events. The problem of detecting building footprints in optical, multispectral satellite data is not easy to solve in a general way, owing to the extreme variability of material, shape, spatial, and spectral patterns that comes with disparate environmental conditions and construction practices rooted in different places across the globe. This difficult problem has been tackled in many different ways since multispectral satellite data at a sufficient spatial resolution started appearing on the public scene at the turn of the century. Whereas a typical approach, until recently, hinged on various combinations of spectral–spatial analysis and image processing techniques, the role of machine learning has progressively expanded in more recent times. This is also attested by the appearance of online challenges like SpaceNet, which invite scholars to submit their own tailored, artificial intelligence (AI)-based solutions for building footprint detection in satellite data, and which automatically compare and rank the proposed maps by accuracy. In this framework, after reviewing the state-of-the-art on this subject, we concluded that some improvement could be contributed to the so-called U-Net architecture, which has shown promise in this respect. In this work, we focused on the architecture of the U-Net to develop a version suited to this task, capable of competing with the accuracy levels of past SpaceNet competition winners using only one model and one type of data. This achievement could pave the way for better performance than the current state-of-the-art. Indeed, all these results have yet to be augmented through the integration of techniques that have in the past proved capable of improving the detection accuracy of U-Net-based footprint detectors.
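As a rough illustration of what an F1 figure such as those quoted above measures, the sketch below computes a pixel-wise F1 score between a predicted and a reference binary building mask. This is an assumption made purely for illustration: the text does not state how footprints are scored, and the function name `pixel_f1` and the toy masks are hypothetical.

```python
from typing import Sequence


def pixel_f1(pred: Sequence[Sequence[int]], truth: Sequence[Sequence[int]]) -> float:
    """Pixel-wise F1 between two binary masks of equal shape (1 = building).

    Illustrative only: the scoring protocol behind the quoted figures
    is not described in the text and may differ from this.
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # building predicted and present
            elif p:
                fp += 1  # building predicted but absent
            elif t:
                fn += 1  # building present but missed
    denom = 2 * tp + fp + fn
    # Assumed convention: two empty masks score 0.0 rather than raising.
    return 2 * tp / denom if denom else 0.0


# Toy 2x3 masks (hypothetical data).
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
score = pixel_f1(predicted, reference)
```

F1 is the harmonic mean of precision and recall, so a low score can come from missed buildings, from false detections, or from both.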
